There's an untapped opportunity for AI in edge computing; who can win the global AI race; companies investing billions in AI infra; Salesforce shifts its AI strategy; how AI is creating a skills gap

ByteDance's chatbots dominate in China and other parts of the world; Anthropic's AI principles irk the White House; Accenture CEO on why humans are still important in the age of AI

This week, I was at the Oxford Generative AI Summit to talk about how AI is making software sprint, with hardware doing its best to keep up. My panel was moderated by Kai Nicol-Schwarz from Sifted and included XMOS’s CEO Mark Lippett, Luffy’s COO John Shaw, and Hailey Eustace, founder of Commplicated and an early-stage deeptech investor.

My first observation is that data center demand is compounding at breakneck speed. A few years ago, AI-related data center buildout was driven by the training requirements of scaled models; now inference dominates workloads, as models stop posing for benchmarks and start doing real work. The energy industry is bracing for multi-year, trillion-dollar buildouts, and we’re already seeing the effects of demand outstripping supply, with energy bills rising by double digits.

That’s because once you’ve got swarms of agents scheduling, drafting, reconciling, and hounding your backlog all day and all night, the baseline load is no longer spiky but continuous. We’re at a stage where we’re industrializing inference, not just renting burst capacity. A great example of where AI agents are starting to deliver value is in regulated industries such as financial services, where they can be deployed for mission-critical processes. FlowX.AI has built dozens of multi-day or multi-goal agents with self-optimization loops; they run commercial lending, fraud detection, or customer support inside banks such as State Street, UniCredit and OTP Group.

The conversation then moved away from the cloud to the edge. And this is the part Wall Street still underprices: AI adoption won’t be confined to hyperscalers; it can be soldered onto boards, flashed into microcontrollers, and embedded in wearables. Cheaper, faster and closer to the physics.

XMOS’s CEO described a generative SoC system they’re building that lets anyone specify system behavior in plain English and have the chip “compile” to that intent, collapsing design cycles and expanding the set of companies that can credibly ship custom silicon.

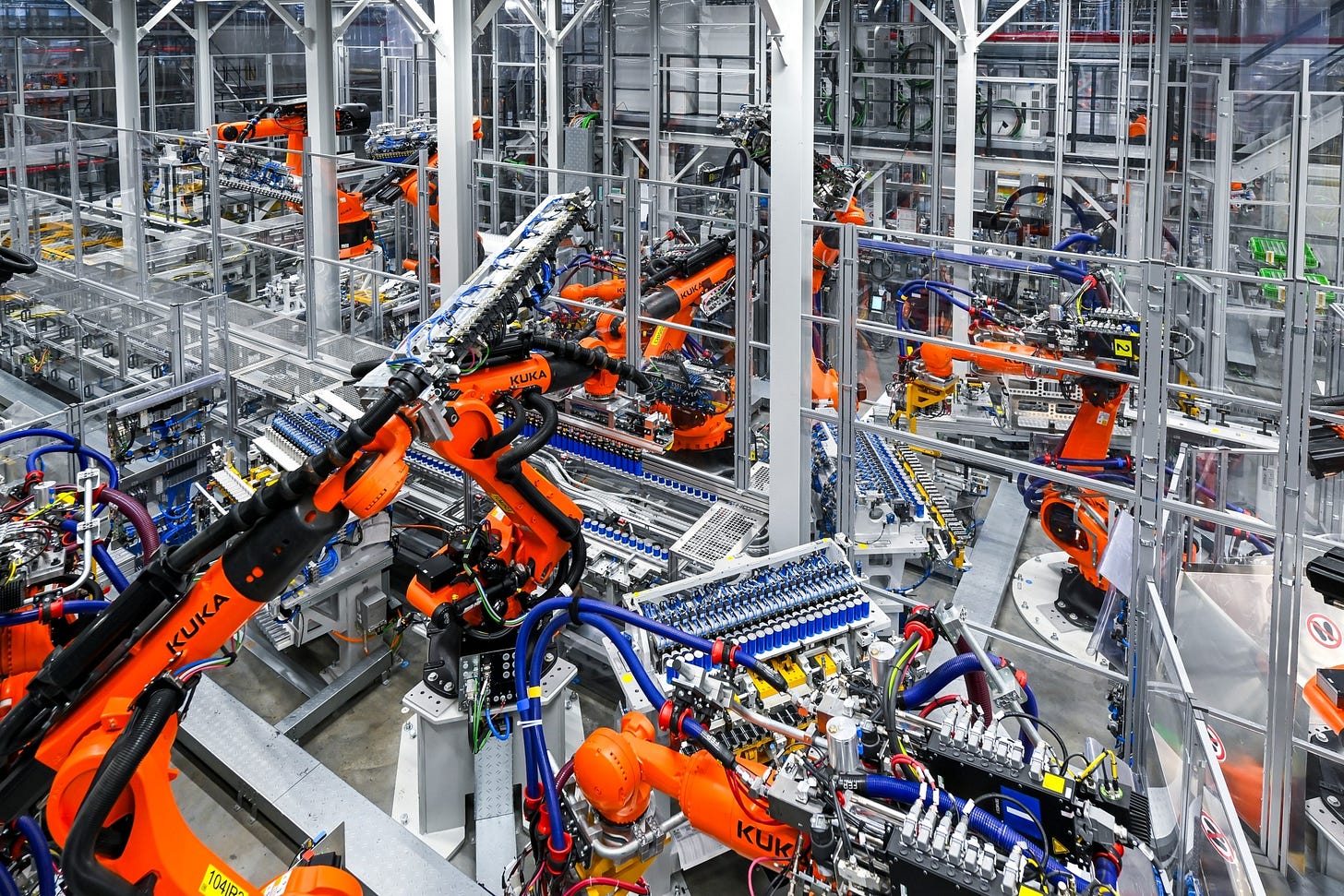

Luffy’s COO made some really great observations about the factory floor, using the example of the BMW plant that makes MINI cars. His thesis was that we can swap out brittle PID loops for small neural networks running inside the microcontrollers already deployed in factories. If we do that at scale, assembly line robots shrink and factories get more energy efficient.

The more interesting point he made was about lifecycle reality, something that I also experienced inside Ocado’s robot warehouses: in many factories or warehouses with moving parts, equipment ages and tolerances wander, and the lovingly tuned factory you commissioned in March is mysteriously running behind by October. But if we can put adaptive AI into the microcontrollers driving the automation, it can track drift across force, distance, time, pressure, torque and whatever else the physics throws at it, while rejecting disturbances that used to trigger maintenance tickets.
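To make the difference between drift and disturbance concrete, here is a minimal sketch. It deliberately swaps in a classical technique (an exponentially weighted moving average with an outlier gate) rather than the small neural networks discussed on the panel, and every name and parameter in it (`DriftTracker`, `alpha`, `k`, `min_std`) is a hypothetical choice for illustration. The slow baseline follows gradual wear, while a sample far outside the recent spread is treated as a one-off disturbance and kept out of the estimate.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class DriftTracker:
    """Hypothetical sketch: follow slow drift in one sensor channel
    (e.g. torque) while ignoring short, large disturbances."""
    alpha: float = 0.05       # smoothing factor for the slow baseline (assumed value)
    k: float = 4.0            # residuals beyond k standard deviations count as disturbances
    min_std: float = 1e-3     # floor so a very steady signal doesn't flag tiny noise
    baseline: Optional[float] = None
    var: float = 1.0          # running variance of residuals (initial guess)

    def update(self, x: float) -> bool:
        """Feed one sample; return True if it was rejected as a disturbance."""
        if self.baseline is None:
            self.baseline = x  # first sample initializes the baseline
            return False
        residual = x - self.baseline
        threshold = self.k * max(math.sqrt(self.var), self.min_std)
        if abs(residual) > threshold:
            return True  # transient: don't let it move the baseline or the variance
        # Exponentially weighted updates let the baseline follow gradual drift.
        self.baseline += self.alpha * residual
        self.var = (1 - self.alpha) * self.var + self.alpha * residual ** 2
        return False


# Usage with synthetic data: a slow linear drift plus one knock at step 250.
signal = [10.0 + 0.01 * i for i in range(500)]
signal[250] += 5.0
tracker = DriftTracker()
rejected = [i for i, x in enumerate(signal) if tracker.update(x)]
print(rejected)  # [250]
```

The design choice here is the asymmetry: the baseline adapts slowly enough that months of wear look like a gentle ramp it can follow, but quickly enough that it never needs a technician to re-tune it by hand.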

Finally, I brought up a blast from the past: IoT devices and wearables. I explained why they might become interesting again, because all the data they stream to the cloud can finally be turned into useful insights. Sometimes, they’ll also be able to run AI models locally for quick wins. I talked about my Meta Ray-Bans and a near future where the glasses run a compact world model locally and call out to the cloud only when they hit an uncertainty cliff. You don’t always need a gigawatt data center connected to your wearable; sometimes a small, well-tuned prior that understands your environment and your routine, plus a smart policy about when to escalate, is preferable.
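One way to picture that escalation policy is the minimal sketch below. It assumes the on-device model returns a probability distribution over labels and uses Shannon entropy as the measure of uncertainty; `answer`, `local_model`, `cloud_model`, the fake models and the 1-bit threshold are all hypothetical, not part of any Meta or Ray-Ban API.

```python
import math
from typing import Callable, Dict, Tuple

Distribution = Dict[str, float]  # label -> probability


def entropy_bits(dist: Distribution) -> float:
    """Shannon entropy of a label->probability map, in bits."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def answer(
    query: str,
    local_model: Callable[[str], Distribution],
    cloud_model: Callable[[str], str],
    max_entropy_bits: float = 1.0,  # hypothetical "uncertainty cliff"
) -> Tuple[str, str]:
    """Answer on-device unless the local model is too uncertain.

    Returns (answer, source), where source is "local" or "cloud".
    """
    dist = local_model(query)
    if entropy_bits(dist) <= max_entropy_bits:
        # Confident enough: return the most probable label without a network call.
        return max(dist, key=dist.get), "local"
    # Past the cliff: escalate to the larger remote model.
    return cloud_model(query), "cloud"


# Hypothetical stand-ins for the two models.
def fake_local(query: str) -> Distribution:
    if "kitchen" in query:
        return {"kettle": 0.9, "toaster": 0.1}  # about 0.47 bits: confident
    return {"a": 0.25, "b": 0.25, "c": 0.25, "d": 0.25}  # 2 bits: unsure


def fake_cloud(query: str) -> str:
    return "cloud answer"


print(answer("what's on the kitchen counter?", fake_local, fake_cloud))  # ('kettle', 'local')
print(answer("who is that across the street?", fake_local, fake_cloud))  # ('cloud answer', 'cloud')
```

Entropy is only one possible trigger; a simpler policy could compare the top probability against a fixed cutoff. Either way, the threshold is the knob that trades battery and latency against accuracy.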

If there was a consensus on the panel, it was this: put the intelligence where the entropy is. A plant line that never runs the same way twice. A pair of always-on AI glasses listening for a wake word. A street where the radio spectrum goes feral at rush hour.

Will the cloud go away? Of course not. But if the last two decades of software development consolidated most economic activity among a few American or Chinese megaplatforms, I continue to believe this AI wave might offer opportunities for European companies with deep industrial experience to build solutions made locally, safely and fast.

And now, here is the week’s news:

❤️Computer loves

Our top news picks for the week - your essential reading from the world of AI

Bloomberg:

Reuters: From OpenAI to Meta, firms channel billions into AI infrastructure as demand booms

The Information: Salesforce CEO Shifts AI Strategy as OpenAI Threat Looms

Bloomberg: Anthropic’s AI Principles Make It a White House Target

WSJ: OpenAI Wants City-Sized AI Supercomputers. First It Needs Custom Chips.

Time: Accenture CEO Julie Sweet on Trust in AI, Building New Workbenches, and Why Humans Are Here to Stay

WSJ: AI Is Juicing the Economy. Is It Making American Workers More Productive?

Bloomberg: Europe Fights for AI Independence to Avoid Becoming Tech ‘Colony’

WSJ: Why AI Will Widen the Gap Between Superstars and Everybody Else