The AI grift, a tragedy of mainstream media failures in three acts; the US and UK sign tech deal; Google's plan to generate more AI training data; meet the new AI startup whisperer

Why AI won't replace junior engineers; the AI movie factory is ramping up; Hangzhou is China's answer to Silicon Valley; life at Lovable; how people use OpenAI and Anthropic's chatbots

The AI grifters, once dressed in TED-stage gravitas, are now walking into the warm embrace of Steve Bannon’s WarRoom podcast or gleefully boosting anti-AI speeches from national conservatism conferences. It’s their right to do so, of course.

But I have to confess I’m a bit confused. How did these bastions of liberal values fighting the “good fight” against the evil empires of technology transition from stern op-eds in the New York Times and The Guardian to joining the MAGA mediasphere?

Allow me to present to you a tragedy in three acts.

Act I: Elon and the doomsday media beat.

We start with Elon Musk, the metronome of media attention. Years back, he warned that AI could be “more dangerous than nukes,” and the press obliged with the usual fevered coverage. “AI will kill us” is catnip to SEO traffic-obsessed news desks; Elon Musk delivered clean headlines, moral urgency, and the halo of a visionary founder. I’m using the past tense because now he mostly delivers incoherent rants on giant screens at weird rallies.

Many of his initial AI doomer takes were soft on evidence and heavy on vibes, yet they set the tone. If a household-name billionaire says the robots are coming, you don’t worry about the footnotes; you clear the front page and push an alert to the news app.

Act II: From research labs to “war rooms.”

Next came the hardcore AI doomers, people like Max Tegmark or Eliezer Yudkowsky who quiver at the sight of large auto-regressive models. Tired of being interviewed by “MSM” journalists for glowing profiles in Wired and given main stage slots at tech conferences, they started toying with ideologically charged venues like Steve Bannon’s WarRoom. The move makes strategic sense if you believe policy is downstream of airtime.

But it also marks a break from the academic grounds of MIT or Berkeley they once claimed as their home turf. When existential risk talk jumps from white papers to culture-war studios, the message doesn’t merely reach a new audience; it changes.

“Pause AI” morphs into trolling in front of Google DeepMind's office for likes on X.

Act III: Endorsements with January 6 baggage.

Then there’s the more explicit political alignment from another class of AI grifters: the skeptics. They’re busy endorsing politicians who made their names fighting platform moderation and fact-finding institutions, not building them.

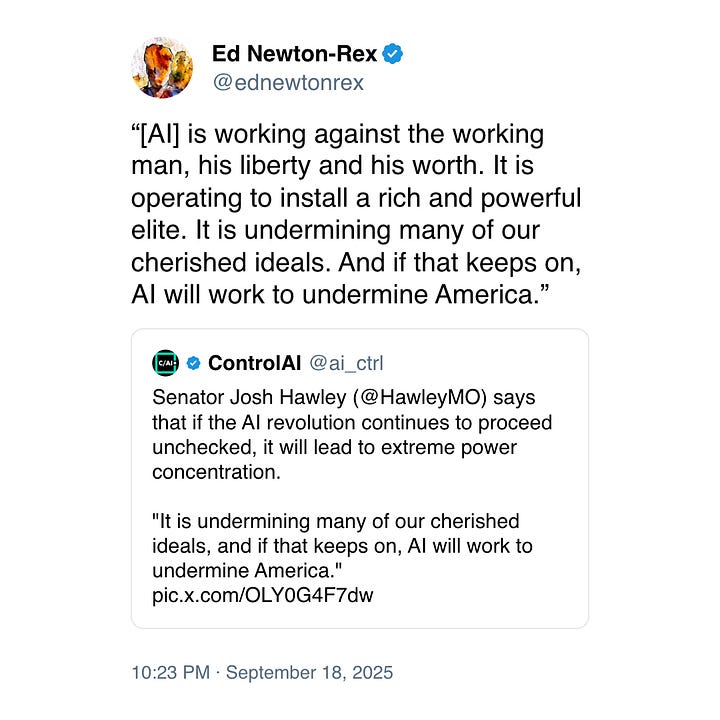

For example, Ed Newton-Rex fashions himself as a hardline copyright advocate writing op-eds in The Guardian and appearing on Time’s list of most influential people in AI. He also has an alliance of creative industry representatives hanging on his every word.

But what his loyal fans might not know is that he’s recently been hard at work publicly boosting speeches from US senator Josh Hawley. Hawley isn’t just another conservative vote; he’s a symbol tied indelibly to January 6. You don’t get to shrug off that association with a “both sides are captured by Big Tech” narrative. Promoting his views is a choice. It says: my allegiance is with the political figure who raised a fist to a crowd that later stormed the Capitol.

I asked Mr Newton-Rex about his newfound love for those on the right of the American political establishment and about some of his other conflicts of interest. He chose to block me, all while complaining about the “fragility of AI CEOs” and about having been blocked by Demis Hassabis.

This tragedy didn’t happen in a vacuum. There were several forces that did the bending.

First of all, AI grifters provide an endless supply of frictionless content. It’s dramatic, universal, and requires no prerequisite knowledge. You can write a hit piece, run a moody stock photo of a glowing robot eye, sprinkle in “Yudkowsky” or “paperclip maximizer,” and call it a day. In an era when traffic targets wag the editorial dog, journalists are rewarded for stories with top-hat-wearing weirdos. “Doom” beats “domain-specific safety engineering” in every headline A/B test ever run.

Secondly, when you’re a reporter on the tech beat, access is alpha. AI grifters are unusually willing to take calls, grant interviews, and gift journalists the comfort of certainty: “This is existential,” they keep saying. That kind of clarity is irresistible when you’re staring down an editor who wants a take by 5 pm. The more airtime they got, the less they had to defend the scaffolding of their arguments.

Finally, the far-right media ecosystem is a machine for converting disaffection into influence. It thrives on elite betrayal stories, and the AI grift slides neatly into the groove: coastal elites built a god-computer that will replace you; the experts are lying; only my non-profit/public benefit corporation can stop them. The alignment feels inevitable the moment we move away from rational conversations to moral panic and expletives.

So what now for mainstream outlets that platformed these voices, giving them op-eds, expert quotes, and sympathetic profiles on the premise that the authors were issue-driven rather than ideology-bound?

Imagine being the editor at The Guardian who greenlit the columns from Ed Newton-Rex and finding out that he is now aligning himself with a January 6-adjacent standard-bearer. Do you run a note to readers? Do you reassess your roster? Or do you keep quiet and hope nobody notices that yesterday’s “AI truth-teller” is today’s culture-war proxy?

Regardless, if a columnist’s advocacy predictably funnels into a movement that celebrates institutional demolition, that’s not mission creep, that’s the mission revealing itself. The question for editors isn’t whether to ban people with right-wing views (they shouldn’t!); it’s how you can stop validating a pipeline that is a profit machine for the AI grift.

For those who are still confused about how that pipeline works, the sleight of hand is simple: start with abstract existential risks (unaligned superintelligence, artists’ rights, etc.), translate them into immediate policy demands (pause research, expand liability, empower regulators), then hand the microphone to politicians whose broader agendas are explicitly hostile to expertise and pluralism. You get the optics of high-minded caution and the outcomes of a panic-driven politics that rarely builds anything, let alone safe AI.

Meanwhile, the actual work (mitigating consumer harms or building enterprise-grade security) has to fight for oxygen against a rolling chyron of apocalypse. If you’re an honest researcher trying to craft rules for model access or benchmark leakage, you’re crowded out by people talking about the heat death of humanity on podcasts that also push gold coins and male vitality supplements.

If you want a fix, start by treating catastrophic claims like biotech claims: give us extraordinary evidence or you will never see the front page. Then, prioritize researchers who publish at peer-reviewed academic conferences over influencers who ship vibes. And when advocates pick teams in the culture war, update your priors publicly.

The story of AI is still being written. But the first draft has to stop mistaking panic for rigor and celebrity for credibility. Otherwise, we’ll keep retracing this loop: doom, platform, pivot, backlash, repeat. And each time, the politics will get a little louder, the policy a little dumber, the public a little angrier, and the technology a little less governed.

And now, here is the week’s news:

❤️Computer loves

Our top news picks for the week - your essential reading from the world of AI

The Guardian: What is new in UK-US tech deal and what will it mean for the British economy?

The Verge: Americans want AI to stay out of their personal lives

Sifted: Pleo CTO Meri Williams on why AI won’t replace junior engineers

The Hollywood Reporter: The AI Movie Factory Is Ramping Up

MIT Technology Review: How do AI models generate videos?

Bloomberg: Are Trampoline Bunnies and Dog Podcasters the Future of Entertainment?

Sifted: Life at Lovable: cracked hires, 17-year-old coders and no shoes

Business Insider: A key type of AI training data is running out. Googlers have a bold new idea to fix that.

Sifted: Aleph Alpha: Can Germany’s one-time OpenAI challenger bounce back?

Business Insider: Meet Silicon Valley's new AI startup whisperer