Mixture of Experts LLM shows real promise in healthcare; the future of AI is multimodal and multilingual; why AI is having a 1995 moment; a closer look at diffusion transformers

French startup Mistral hopes to take on OpenAI; Meta's V-JEPA takes AI in a non-generative direction; how businesses are using generative AI; AI warfare is here; Alibaba shows off lip-syncing model

At the end of 2023, MBZUAI – the world’s first AI university – set up the Institute of Foundational Models to accelerate the research and application of generative AI. The Institute has spent the last few months bringing together top AI scientists, engineers, and experts from around the world to develop large-scale, reusable, and affordable AI models that can be adapted to a wide range of sectors and industries.

Last week, I had the chance to visit MBZUAI and meet some of the teams from the Institute, so I want to share what I learned and introduce five foundational models that were released just a few days ago. These models include open source code and datasets, plus web applications that you can try out - I encourage you to do so!

But more importantly, these models illustrate the university’s goal of bringing AI to the world, whether by applying interesting techniques like Mixture of Experts to healthcare, by building multimodal, multilingual models that perform just as well in Arabic or Hindi as they do in English, or by building an LLM that can be trained and run on affordable hardware rather than high-end GPUs.

Let’s start with BiMediX: it’s the world’s first bilingual medical LLM that outperforms GPT-4, Med-PaLM-540B and Med42, in English and Arabic, on medical benchmarks, including medical board exams.

While its multilingual capabilities are remarkable, this is also one of the first uses of Mixture of Experts (a technique recently popularized by Mistral) for a medical LLM, which provides a boost of up to 4x in performance at inference compared to traditional large models.
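For the curious, here is a rough idea of why Mixture of Experts can make inference cheaper: a small gating function scores every expert for each token, and only the top-scoring few are actually run. The snippet below is a minimal toy sketch in plain Python, not BiMediX's or Mistral's code. The names (`moe_layer`, `make_expert`, `gate_weights`), the choice of 8 experts with 2 active per token, and the trivial "experts" themselves are all assumptions made for illustration.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    # Hypothetical "expert": a stand-in for a real feed-forward block.
    return lambda x: [scale * v for v in x]


def moe_layer(x, gate_weights, experts, top_k=2):
    """Route one token vector x to its top_k experts and mix their outputs."""
    # One gate score per expert: dot product of x with that expert's gate vector.
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    # Keep only the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalise the gate weights over the chosen experts only.
    weights = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # the other experts are never evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


random.seed(0)
num_experts, dim = 8, 4  # assumed sizes, for illustration only
experts = [make_expert(scale=i + 1) for i in range(num_experts)]
gate_weights = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]
token = [0.5, -1.0, 0.25, 2.0]

output, used = moe_layer(token, gate_weights, experts, top_k=2)
print(f"experts used: {used} ({len(used)} of {num_experts})")
```

In this toy setup each token touches only 2 of the 8 experts, so most of the layer's parameters sit idle for any given token. That is the intuition behind the inference savings, although real speedups depend on the model and the hardware.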

Potential use cases for BiMediX include virtual healthcare assistants and telemedicine - think about having access to a family doctor 24/7. But what’s also interesting is that you can use it for medical report summarization, clinical symptom diagnosis, or even medical research. For example, you could give it a report from a specialist doctor written in medical jargon and it will interpret it for you in plain English - or Arabic. That latter use case is very relevant to me: I saw an eye doctor recently and was sent a medical letter after my visit which I struggled to comprehend. I fed that letter to BiMediX and it explained that, essentially, my eye’s cornea was thinner than normal, which caused some abnormalities in my eye pressure readings!

BiMediX was tested with medical doctors in the United Arab Emirates and India, including general practitioners and specialists from different departments like ENT, radiology, dentistry and surgery. These doctors verified the model’s outputs in their specific areas of speciality. MBZUAI also consulted native Arabic-speaking doctors, who verified the quality of the Arabic responses. This contributed to the creation of a dataset that includes over 200,000 high-quality medical dialogues that enrich the model's training material.

You can try BiMediX for yourself here, access the code on GitHub, and read the paper on arXiv.

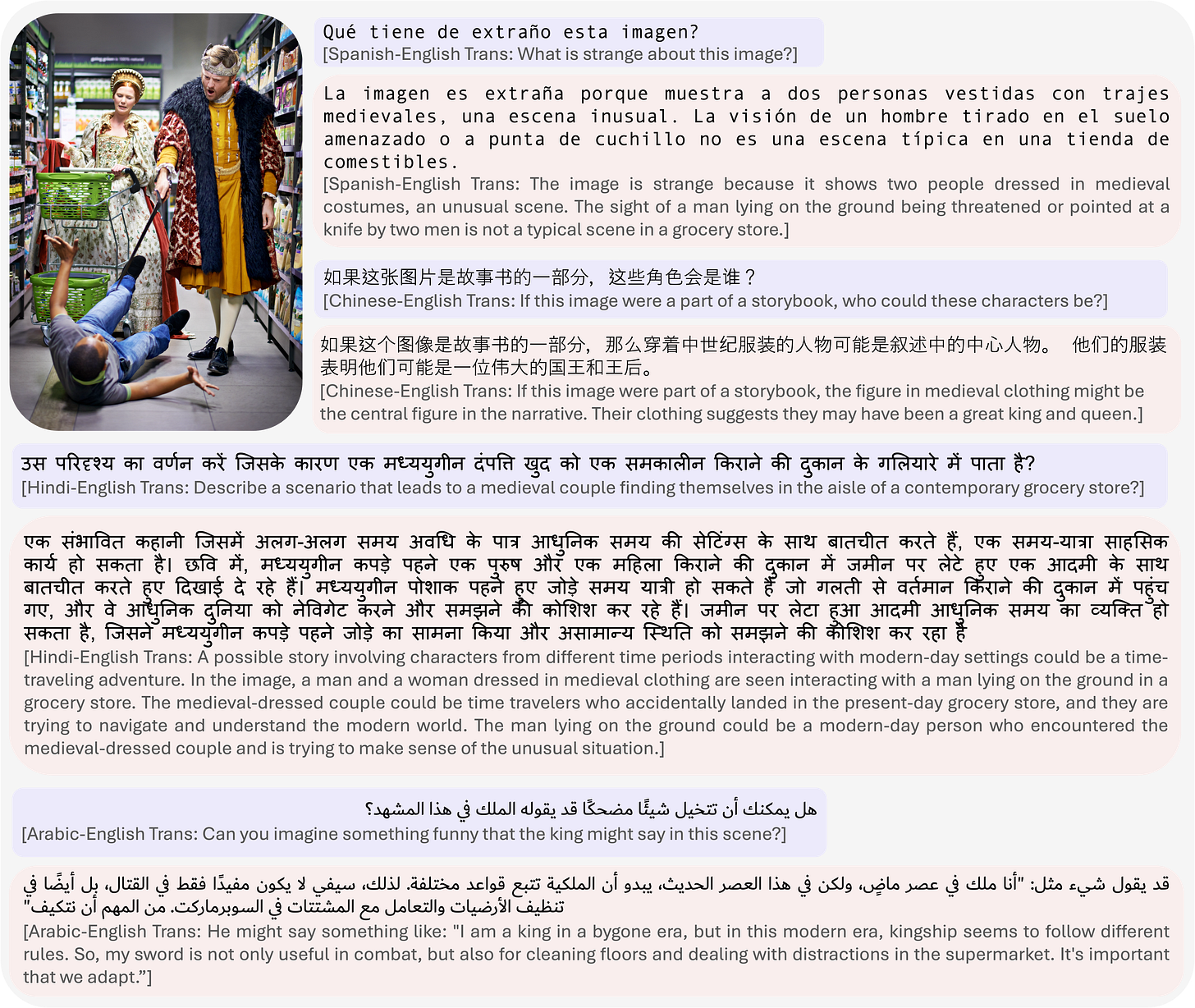

Next up, PALO: it’s the world’s first large multilingual, multimodal model to offer visual reasoning capabilities in 10 major languages: English, Chinese, Hindi, Spanish, French, Arabic, Bengali, Russian, Urdu, and Japanese, spanning a total of 5 billion people (65% of the world population).

PALO takes a semi-automated translation approach, using a fine-tuned LLM to adapt the multimodal instruction dataset from English to the other languages, thereby ensuring high linguistic fidelity even for low resource languages such as Urdu or Bengali.

The model comes in three parameter variants - 1.7B, 7B and 13B - and was developed in partnership with the Australian National University, Aalto University, The University of Melbourne, and Linköping University.

You can try PALO here, check out the code on GitHub, and read the paper on arXiv.

Moving on to GLaMM: the first-of-its-kind LLM capable of generating natural language responses related to objects in an image – you can think of it as a richer, enhanced version of image captioning.

Unlike existing LLMs, the model accommodates both text and visual prompts, facilitating enhanced multimodal user interaction.

For example, visually impaired users can ask the model to describe particular objects in an image in greater detail (“If a photo includes a tree, what kind of tree is it or where is it in the photo?”). Another use case is to replace an object in an image with another - for example, replace a house with a block of flats or a chair with a table in a photo. You can even generate new objects to replace existing ones, which could be useful for e-commerce apps or photo editing software.

The model was developed in partnership with the Australian National University, Aalto University, Carnegie Mellon University, University of California - Merced, Linköping University and Google Research.

You can read the paper here and try it out here.

GeoChat is a large vision model that was just accepted at CVPR, one of the largest computer vision conferences in the world. GeoChat can describe satellite images in great detail and answer specific questions about them; for example, how many houses are in a picture and what color their roofs are. It could really improve the way we use an app such as Google Maps and help us find places faster on a map.

There’s no public demo for GeoChat yet but you can have a look at what it can do on the project’s GitHub page.

And finally, MobiLlama is a small language model (SLM) that is the top-performing model in the class of sub-one-billion-parameter models. The model can run on a phone with ultra-fast inference and has been optimized for fast training on limited compute resources, so you won’t need a large cluster of GPUs to make it work.

You can prompt MobiLlama via this chatbot on Hugging Face, read the paper here, and check out the code on GitHub.

I am very excited about these models and about a future where AI is accessible to and built by more than just two or three countries in the world.

And now, here is the week’s news:

❤️Computer loves

Our top news picks for the week - your essential reading from the world of AI

The future of AI video is here, super weird flaws and all [Washington Post]

AI is having a '1995 moment' [Business Insider]

AI boom sparks concern over Big Tech’s water consumption [FT]

How a Shifting AI Chip Market Will Shape Nvidia’s Future [WSJ]

Meet the French startup hoping to take on OpenAI [The Economist]

AI is revolutionising the biggest industry you’ve never heard of [Sifted]

Why Meta’s V-JEPA model can be a big deal for real-world AI [VentureBeat]

Diffusion transformers are the key behind OpenAI’s Sora — and they’re set to upend GenAI [TechCrunch]

AI warfare is already here [Bloomberg]

How businesses are actually using generative AI [The Economist]

Revealed: the names linked to ClothOff, the deepfake pornography app [The Guardian]

Alibaba’s new AI system ‘EMO’ creates realistic talking and singing videos from photos [VentureBeat]

⚙️Computer does

AI in the wild: how artificial intelligence is used across industry, from the internet, social media, and retail to transportation, healthcare, banking, and more

Omnicom is leveraging AI to optimize workflows with a virtual assistant [Business Insider]

Brave brings its AI browser assistant to Android [The Verge]

Journalists used AI to trace German far-left militant well before police pounced [Reuters]

How one construction company is using AI to make workers more safe [Business Insider]

Gardin is using generative AI and synthetic data to drive plant growth — here's how [Business Insider]

Expect AI-made prescription drugs to be ready for clinical trials in a couple of years, predicts Google DeepMind CEO [Business Insider]

AI motoring cameras are trialled to police phone and seatbelt use [BBC]

Google wants to make Android users super-texters with new AI features [Business Insider]

Fashion brands using AI to analyze trends could 'completely transform the creative process,' a consultant says [Business Insider]

Honor’s Magic 6 Pro launches internationally with AI-powered eye tracking on the way [The Verge]

A.I. Frenzy Complicates Efforts to Keep Power-Hungry Data Sites Green [New York Times]

I used AI work tools to do my job. Here’s how it went. [Washington Post]

🧑‍🎓Computer learns

Interesting trends and developments from various AI fields, companies and people

Google Cloud links up with Stack Overflow for more coding suggestions on Gemini [TechCrunch]

Apple has been ‘disturbingly quiet’ about AI and smartphones, IDC says [CNBC]

Generative AI as a speech coach [Axios]

With Brain.ai, generative AI is the OS [TechCrunch]

Brave’s Leo AI assistant is now available to Android users [TechCrunch]

Google DeepMind’s new generative model makes Super Mario–like games from scratch [MIT Technology Review]

Alibaba Unveils Big Cloud Price Cuts as AI Rivalry Deepens [Bloomberg]

Intel sees AI opportunity for standalone programmable chip unit [Reuters]

Consultants navigate regulatory sensitivities in the AI era [FT]

New legal AI venture promises to show how judges think [Reuters]

H2O AI releases Danube, a super-tiny LLM for mobile applications [VentureBeat]

Rural America is the new hotbed in the AI race as tech giants spend billions to turn farms into data centers [Business Insider]

Robotics Startups Hope the AI Era Means Their Time Has Come [Bloomberg]

Microsoft tailors AI for finance teams in specialized 'Copilot' strategy [Reuters]

Apple will ‘break new ground’ in generative AI this year, Tim Cook teases [9to5Mac]

Meta's Zuckerberg discusses mixed reality devices, AI with LG leaders in South Korea [Reuters]

Lightricks announces AI-powered filmmaking studio to help creators visualize stories [TechCrunch]

Meta Wants Llama 3 to Handle Contentious Questions as Google Grapples With Gemini Backlash [The Information]

SambaNova debuts 1 trillion parameter Composition of Experts model for enterprise gen AI [VentureBeat]

Adobe reveals a GenAI tool for music [TechCrunch]

Anamorph’s generative technology reorders scenes to create unlimited versions of one film [Reuters]

StarCoder 2 is a code-generating AI that runs on most GPUs [TechCrunch]

Abu Dhabi sovereign fund to invest space tech, AI this year [Reuters]

Nvidia, Hugging Face and ServiceNow release new StarCoder2 LLMs for code generation [VentureBeat]

SambaNova now offers a bundle of generative AI models [Reuters]

Morph Studio lets you make films using Stability AI–generated clips [Reuters]

AI comes to the backyard barbecue—and could double the market size [Fortune]

AI models make stuff up. How can hallucinations be controlled? [The Economist]

Spain to develop open-source LLM trained in Spanish, regional languages [Sifted]

BBC to write headlines using artificial intelligence [The Telegraph]

AI video wars heat up as Pika adds Lip Sync powered by ElevenLabs [VentureBeat]

Microsoft’s GitHub Offers Companies Souped-Up AI Coding Tool [Bloomberg]

Stanford study outlines risks and benefits of open AI models [Axios]

Why do Nvidia’s chips dominate the AI market? [The Economist]

Apple just killed its electric car project, shifting focus to generative AI [ZDNet]

Klarna says its AI assistant does the work of 700 people after it laid off 700 people [Fast Company]

Mobile OS maker Jolla is back and building an AI device [TechCrunch]

Google Is Paying Publishers to Test an Unreleased Gen AI Platform [AdWeek]

UAE’s biggest company is adding an AI-powered observer backed by Microsoft to its board [Bloomberg]

Alibaba staffer offers a glimpse into building LLMs in China [TechCrunch]

Tumblr’s owner is striking deals with OpenAI and Midjourney for training data, says report [The Verge]

What makes Einstein Copilot a genius? Salesforce says it’s all about the data [VentureBeat]

Bankers Will See AI Transform Three-Quarters of Day, Study Says [Bloomberg]

OpenAI sales leader says ‘we see ourselves as AGI sherpas’ [VentureBeat]

Educators teaching AI literacy to students should include ethics in their lesson plans, scholars say [Business Insider]

OpenAI claims New York Times ‘hacked’ ChatGPT to build copyright lawsuit [The Guardian]

Writer unveils Palmyra-Vision, a multimodal AI to reimagine enterprise workflows [VentureBeat]

Instead of taking your job, AI might be more like the best intern ever [Business Insider]

Mistral AI releases new model to rival GPT-4 and its own chat assistant [TechCrunch]

Micron starts mass production of memory chips for use in Nvidia's AI semiconductors [Reuters]

GoDaddy launches a suite of AI tools for small businesses [Fast Company]

China’s Oppo unveils prototype AR glasses with AI voice assistant [CNBC]

Deutsche Telekom showcases app-less AI smartphone concept [Reuters]

FlowGPT is the Wild West of GenAI apps [TechCrunch]

SK Telecom partners with AI search startup Perplexity in Korea [Reuters]

Qualcomm unveils AI and connectivity chips at Mobile World Congress [VentureBeat]

Qualcomm’s on-device AI models will be hosted on Hugging Face and GitHub [The Verge]

Wall Street is loving AI while Big Tech is warning employees about how they use it [Business Insider]

Smartphone giants like Samsung are going to talk up ‘AI phones’ this year — here’s what that means [CNBC]

Nvidia launches RTX 500 and 1000 Ada Generation laptop GPUs for AI on the go [VentureBeat]

Zuckerberg’s Asia Tour to Range From AI to Ambani Wedding Party [Bloomberg]