Large models are pushing tech companies to rethink chip design; OpenAI unveils Sora generative video model; the world's first AI-powered Valentine's Day; ex-Salesforce CEO launches new startup

Adobe embraces AI across its products; Nvidia shows large model running on a personal computer; Slack brings generative AI to its products; AI is having a moment for business software

In April 2022, I received a message on LinkedIn from a senior analyst at a deep tech VC firm. She had read an old article of mine about the semiconductor supply chain and wanted to know if anything had changed since it was published, particularly with the rising demand for AI chips.

I was reminded of that LinkedIn exchange this week, when I read a report in the Wall Street Journal that Sam Altman was seeking trillions in funding (or maybe just billions, according to Bloomberg) for a large project to develop new chips for artificial intelligence. So I’m going to use this newsletter to give you an updated version of what I shared with the VC analyst two years ago.

First, a bit of history: in the late 2000s, GPUs, originally designed for rendering graphics in games and visual simulations, started to become an important part of the modern AI infrastructure built by cloud providers and hyperscalers. Their parallel processing architecture, characterized by thousands of cores, enabled efficient computation of the matrix operations central to deep learning algorithms. However, until a few years ago, most of the AI workloads that ran in data centers were still handled by CPUs, since they were easier to program and cheaper to source, while GPUs were mostly reserved for research or experimental work.

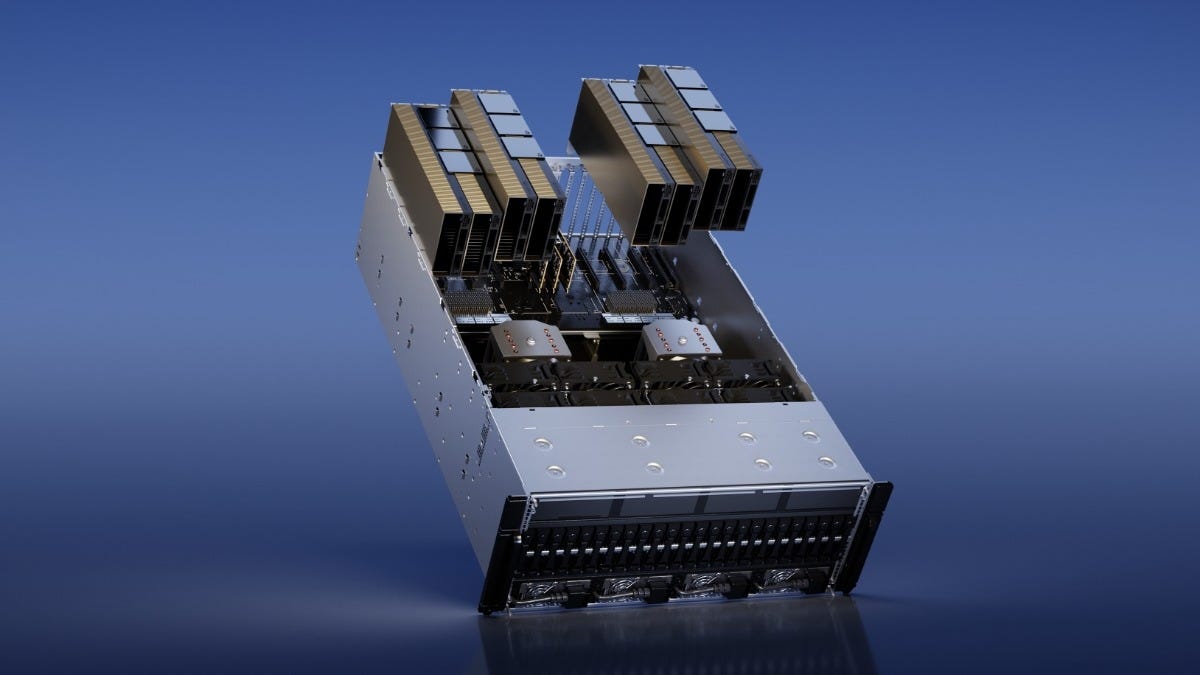
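To make the point about matrix operations concrete, here is a minimal pure-Python sketch of a matrix multiplication, the workhorse of deep learning. It is illustrative only: real frameworks hand this work to highly optimized GPU kernels, and the function names below are my own. The thing to notice is that every output element is an independent dot product, which is exactly the kind of work thousands of GPU cores can do at the same time.

```python
def matmul(a, b):
    """Naive matrix multiply of an m x n matrix by an n x p matrix (lists of rows)."""
    m, n = len(a), len(a[0])
    if len(b) != n:
        raise ValueError("inner dimensions must match")
    p = len(b[0])
    # Each C[i][j] reads only row i of A and column j of B, so all m * p
    # output elements could be computed in parallel (conceptually, one per GPU thread).
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(p)]
            for i in range(m)]


def matmul_flops(m, n, p):
    """Conventional operation count for an (m x n) @ (n x p) product:
    one multiply and one add per inner-loop step, i.e. 2 * m * n * p."""
    return 2 * m * n * p
```

For a sense of scale, `matmul_flops(4096, 4096, 4096)` comes to roughly 137 billion operations for a single product of two 4096×4096 matrices, and a large model performs a great many products of this kind every time it runs.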

Transformer-based large models, such as OpenAI's GPT series, Meta’s Llama and Google's Gemini, changed that CPU-GPU paradigm for AI almost overnight. First, these models use self-attention mechanisms to capture contextual dependencies and semantic relationships within data, and they consist of numerous layers and billions of parameters, requiring vast computational resources for training and inference. Second, large models scale very well with compute: as a rule of thumb, the more GPUs you train on, the better your model will perform. This phenomenon, coupled with rising tensions in the Asia-Pacific region, sparked a buying frenzy among technology companies, leading to acute chip shortages around the world.
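For readers curious about what self-attention actually computes, below is a bare-bones, single-head version of scaled dot-product attention, softmax(QKᵀ/√d)·V, in plain Python. It is a sketch under simplifying assumptions: it takes the query, key and value vectors as given (production models derive them from learned weight matrices, use many attention heads and run on GPUs), and the function names are illustrative rather than taken from any library.

```python
import math


def softmax(xs):
    """Turn a list of scores into weights that sum to 1."""
    m = max(xs)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(q, k, v):
    """Single-head scaled dot-product attention.

    q, k, v are lists of per-token vectors (one row per token); q and k rows
    share width d. Returns one output vector per query token.
    """
    d = len(q[0])
    scale = 1 / math.sqrt(d)
    out = []
    for qi in q:
        # Score this token's query against every token's key: n scores per token,
        # so n * n scores in total for a sequence of n tokens.
        scores = [sum(a * b for a, b in zip(qi, kj)) * scale for kj in k]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v))
                    for c in range(len(v[0]))])
    return out
```

Every token is scored against every other token, so the work grows quadratically with sequence length and consists almost entirely of dot products, which is a big part of why these models lean so heavily on GPUs.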

So it’s no wonder that Sam Altman is looking to diversify the global AI supply chain and create a hardware ecosystem that can support the exponential growth in demand for compute from AI software companies. That said, designing an AI chip and the interconnect fabric capable of handling a large model with billions of parameters involves a myriad of costs, from research and development to fabrication and testing. Here's a breakdown of the key cost components:

R&D expenditure: Developing an AI chip requires extensive R&D efforts, including architecture design, optimization, and simulation. This phase entails significant investment in human resources, specialized tools, and infrastructure. In total, a company would need to raise between 50 and 250 million dollars to cover the typical R&D costs of designing a performance-oriented chip.

IP licensing and royalties: Many AI chip designs incorporate intellectual property (IP) licensed from third-party providers, such as Arm. Acquiring these licenses and paying royalties adds to the overall cost of chip development. Licensing fees vary with the size and performance of a chip, but for server-class designs, companies can expect to spend north of 10 million dollars. Royalties are usually under 10% of the chip's selling price.

Mask set and fabrication: Once the chip design is finalized, it needs to be fabricated onto silicon wafers using photolithography. The creation of mask sets, which define the chip's layout and structure, incurs substantial expenses. Fabrication costs escalate with shrinking process nodes, as advanced technologies demand higher precision and complexity. A current-generation 3nm process, for example, requires hundreds of masks, and each mask layer costs about 100,000 dollars, pushing the cost of a full mask set into the 50 million dollar range.

Testing and validation: Ensuring the functionality and reliability of AI chips necessitates rigorous testing and validation procedures. This involves manufacturing test structures, conducting electrical tests, and debugging prototypes. Comprehensive testing contributes to both time and cost overheads.

Packaging and assembly: Following fabrication, AI chips undergo packaging and assembly processes to prepare them for integration into servers. Packaging costs depend on factors like chip size, complexity, and packaging materials. For a 3nm chip, the assembly and package related costs can easily add 30% to the total price.
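To put the components above side by side, here is a hypothetical back-of-envelope model in Python. The defaults are assumptions drawn from the figures quoted in this section: about $100,000 per mask layer, packaging adding around 30%, and a 10% royalty used as an upper bound. The mask layer count is a free parameter; 500 is simply the value that, at $100,000 per layer, reproduces the roughly $50 million mask set mentioned above. Modeling packaging as a percentage uplift on the die cost is my own simplification, and all example inputs are made up.

```python
# Hypothetical back-of-envelope cost model for a server-class AI chip.
# Every default and example input is an illustrative assumption.

MILLION = 1_000_000


def mask_set_cost(mask_layers: int, cost_per_layer: float = 100_000) -> float:
    """Mask set cost: one mask per layer at roughly $100k each."""
    return mask_layers * cost_per_layer


def upfront_cost(rnd: float, ip_license: float, mask_layers: int) -> float:
    """One-off spend before the first chip ships: R&D + IP license + mask set."""
    return rnd + ip_license + mask_set_cost(mask_layers)


def recurring_cost_per_chip(die_cost: float, selling_price: float,
                            packaging_uplift: float = 0.30,
                            royalty_rate: float = 0.10) -> float:
    """Per-chip cost: die plus packaging/assembly (assumed to be a % uplift on
    the die cost) plus an IP royalty charged as a share of the selling price."""
    packaged = die_cost * (1 + packaging_uplift)
    royalty = royalty_rate * selling_price
    return packaged + royalty


if __name__ == "__main__":
    print(f"Upfront: ${upfront_cost(150 * MILLION, 10 * MILLION, 500):,.0f}")
    print(f"Per chip: ${recurring_cost_per_chip(1_000, 10_000):,.0f}")
```

With $150 million of R&D, a $10 million IP license and 500 mask layers, the upfront bill comes to $210 million before a single chip ships; a die that costs $1,000 to make and sells for $10,000 then carries about $2,300 of recurring cost once packaging and royalties are added.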

Add up all the above costs and tack on the software work that needs to be done in terms of programming toolchains or operating system optimization, and you can see why building AI chips very quickly becomes a costly endeavor. The challenges are further amplified when targeting low-volume production, which is the typical case for AI chips for training (and to some extent, for inference too). Here are some reasons why producing low-volume chips is difficult:

Economies of scale: Semiconductor fabrication facilities, or fabs, operate most efficiently at high production volumes. Low-volume production runs result in higher unit costs because manufacturing capacity goes underutilized and overhead expenses are spread across fewer chips.

NRE costs: Non-recurring engineering (NRE) costs, incurred during the initial design and setup phases, remain fixed regardless of production volume. Spreading these costs over a small number of chips significantly inflates the per-unit cost for low-volume production runs.

Supply chain constraints: Semiconductor supply chains are optimized for high-volume production for consumer electronics devices such as TVs or smartphones, leading to challenges in sourcing materials, components, and equipment for low-volume projects. Limited availability and higher procurement costs further impede cost-effective production.

Design complexity: Developing AI chips entails intricate design methodologies and sophisticated manufacturing processes. Achieving optimal performance and power efficiency requires extensive optimization and validation efforts, which may not be economically viable for low-volume applications.
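The NRE problem is easiest to see with a little arithmetic. The sketch below is a hypothetical model: it assumes a fixed $200 million of NRE and $1,000 of recurring cost per chip (both made-up round numbers) and spreads the NRE evenly over the production run, ignoring yield, inventory and financing costs.

```python
# Hypothetical NRE amortization: how fixed engineering costs dominate at low volume.

def per_unit_cost(nre: float, volume: int, marginal_cost: float) -> float:
    """Fully loaded cost per chip: NRE spread evenly over the run plus the
    recurring cost of making each additional chip."""
    if volume <= 0:
        raise ValueError("volume must be positive")
    return nre / volume + marginal_cost


NRE = 200_000_000     # hypothetical one-off spend (design, masks, IP)
MARGINAL = 1_000      # hypothetical recurring cost per packaged chip

for volume in (10_000, 100_000, 1_000_000, 10_000_000):
    cost = per_unit_cost(NRE, volume, MARGINAL)
    print(f"{volume:>12,} chips -> ${cost:,.0f} per chip")
```

At 10,000 chips, the NRE adds $20,000 to the cost of every chip; at 10 million chips, it adds just $20, which is why low-volume training accelerators are so expensive per unit.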

Despite the formidable costs and challenges associated with designing AI chips, ongoing advancements in semiconductor technology hold promise for mitigating these hurdles. Innovations such as chiplets, which allow modular integration of chip components, and emerging AI-assisted design and fabrication technologies offer potential avenues for cost reduction and scalability.

As the demand for specialized AI hardware continues to surge, balancing innovation with cost-effectiveness will be crucial in driving the next wave of AI-powered devices and experiences. So while the 7-trillion-dollar figure that the Wall Street Journal floated might be exaggerated, significant investment will be needed to avoid the very real scenario of the AI industry running out of compute.

And now, here is the week’s news:

❤️Computer loves

Our top news picks for the week - your essential reading from the world of AI

OpenAI Unveils A.I. That Instantly Generates Eye-Popping Videos [New York Times]

Thanks to AI, Business Technology Is Finally Having Its Moment [WSJ]

The AI chat app being trialled in NSW schools which makes students work for the answers [The Guardian]

Early Adopters of Microsoft’s AI Bot Wonder if It’s Worth the Money [WSJ]

Meta’s AI Chief Yann LeCun on AGI, Open-Source, and AI Risk [Time]

Adobe’s Very Cautious Gambit to Inject AI Into Everything [Bloomberg]

Can OpenAI create superintelligence before it runs out of cash? [FT]

Chat With RTX brings custom local chatbots to Nvidia AI PCs [VentureBeat]

⚙️Computer does

AI in the wild: how artificial intelligence is used across industry, from the internet, social media, and retail to transportation, healthcare, banking, and more

Google Announces Free AI Cyber Tools to Bolster Online Security [Bloomberg]

Bulletin is a new AI-powered news reader that tackles clickbait and summarizes stories [TechCrunch]

Despite Deepfake and Bias Risks, AI Is Still Useful in Finance, Firms Told [Bloomberg]

AI means restaurants might soon know what you want to order before you do [Fortune]

Google will use AI and satellite imagery to monitor methane leaks [Engadget]

Norfolk council to use AI to answer some phone queries [BBC]

Can AI help maximize your credit card rewards? We turned to ChatGPT to find out. [Fortune]

When A.I. Bridged a Language Gap, They Fell in Love [New York Times]

How Blackstone is using AI to win over big insurance companies hungry for private credit [Business Insider]

AI analyses bird sounds for Somerset conservation project [BBC]

Galaxy AI features, including Live Translation, are headed to Galaxy Buds [ZDNet]

Looking for more intelligence from meetings? Otter.ai is taking a shot with ‘Meeting GenAI’ [VentureBeat]

🧑‍🎓Computer learns

Interesting trends and developments from various AI fields, companies and people

Advisory firm Ankura launches generative AI tool crafted with ChatGPT developer [Reuters]

OpenAI’s Sam Altman Seeks US Blessing to Raise Billions for AI Chips

AI Is Tutoring Students, but Still Struggles With Basic Math [WSJ]

Largest text-to-speech AI model yet shows ‘emergent abilities’ [TechCrunch]

Kong’s new open source AI Gateway makes building multi-LLM apps easier [TechCrunch]

Armilla wants to give companies a warranty for AI [TechCrunch]

AI adoption increasing sales in telecoms sector, Nvidia report shows [Reuters]

Meta’s new AI model learns by watching videos [Fast Company]

A.I. Art That’s More Than a Gimmick? Meet AARON [New York Times]

Apple Readies AI Tool to Rival Microsoft’s GitHub Copilot [Bloomberg]

There is more to chat than just Q&A as Vectara debuts new RAG powered chat module [VentureBeat]

‘Game on’ for video AI as Runway, Stability react to OpenAI’s Sora leap [VentureBeat]

What tennis reveals about AI’s impact on human behaviour [The Economist]

Google’s new version of Gemini can handle far bigger amounts of data [MIT Technology Review]

Airbnb plans to use AI, including its GamePlanner acquisition, to create the ‘ultimate concierge’ [TechCrunch]

Google to set up new artificial intelligence (AI) hub in France [Reuters]

Databricks CEO Predicts Major Drop in AI Chip Prices [The Information]

AI is shaking up online dating with chatbots that are ‘flirty but not too flirty’ [CNBC]

Palantir CEO says there will be winners and losers in the world of artificial intelligence [CNN]

Apple researchers unveil ‘Keyframer’: An AI tool that animates still images using LLMs [VentureBeat]

Penn is the first Ivy League to offer a degree in AI [Business Insider]

Andrej Karpathy confirms departure (again) from OpenAI [VentureBeat]

Google quietly launches internal AI model named 'Goose' to help employees write code faster, leaked documents show [Business Insider]

Cohere for AI launches open source LLM for 101 languages [VentureBeat]

What comes after Stable Diffusion? Stable Cascade could be Stability AI’s future text-to-image generative AI model [VentureBeat]

OpenAI upgrades ChatGPT with persistent memory and temporary chat [VentureBeat]

Mozilla downsizes as it refocuses on Firefox and AI [TechCrunch]

How LLMs are learning to differentiate spatial sounds [VentureBeat]

Google and Anthropic Are Selling Generative AI to Businesses, Even as They Address Its Shortcomings [WSJ]

Why Big Tech’s watermarking plans are some welcome good news [MIT Technology Review]

Why AI makers don't tell their chatbots to answer only what they know [Business Insider]

"AI native" Gen Zers are comfortable on the cutting edge [Axios]

Nvidia CEO Says Tech Advances Will Keep AI Cost in Check [Bloomberg]

See inside the AI-powered fitness studio that's led by virtual trainers [Business Insider]

Nvidia wants to work with the world's most powerful tech companies to make customized AI chips [Business Insider]

If you're looking for a job, the most important skill is adaptability amid the rise of AI and ChatGPT [Business Insider]

UK’s AI Safety Institute ‘needs to set standards rather than do testing’ [The Guardian]

The unexpected sign that AI may soon take your job: Higher pay [Fortune]

Tech companies axe 34,000 jobs since start of year in pivot to AI [FT]

Microsoft Copilot AI climbs Google, Apple app store charts after Super Bowl ad [VentureBeat]

The Super Bowl’s best and wackiest AI commercials [Ars Technica]

Google pledges 25 million euros to boost AI skills in Europe [Reuters]

Fan wiki hosting site Fandom rolls out controversial AI features [The Verge]

As more governments look at AI rules, Accenture tests software to help companies comply [Semafor]

Managers, employees turn to ChatGPT to write performance reviews [Axios]